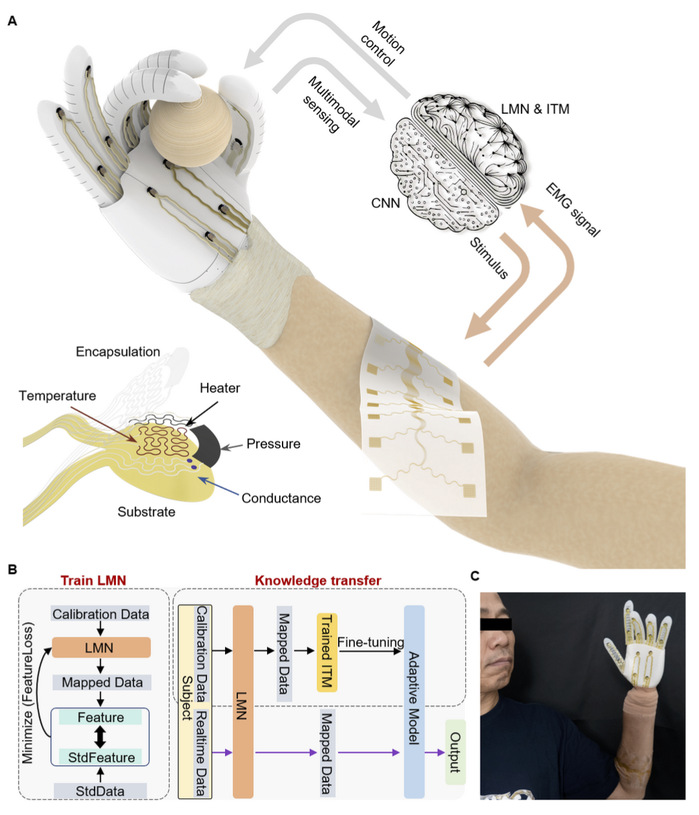

On September 10, the group led by Assistant Professor Yu You from the School of Biomedical Engineering at ShanghaiTech, along with collaborators, published a research paper titled “Printed sensing human-machine interface with individualized adaptive machine learning” in the journal Science Advances. The work introduces an advanced 3D-printed flexible human-machine interface technology. It integrates multimodal sensing with individualized adaptive machine learning to address challenges in gesture recognition and robotic tactile feedback, and it shows strong potential for intelligent prosthetics.

The human-machine interface (HMI) is key to achieving the fusion of “human-machine-environment,” with broad applications in intelligent manufacturing, healthcare, and beyond. However, existing technologies face multiple bottlenecks: traditional micro-nano fabrication is costly and inefficient; machine learning methods are sensitive to individual physiological differences, requiring tedious recalibration; and robotic perception is limited, lacking biomimetic multimodal tactile capabilities for precise recognition and interaction tasks.

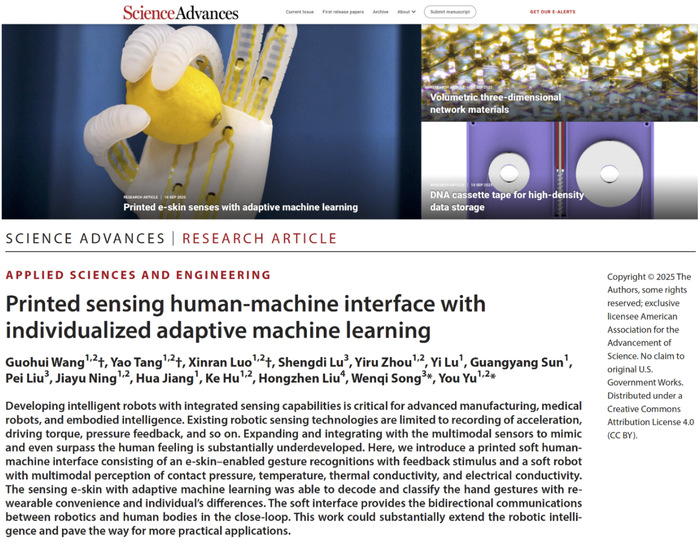

To tackle these issues, the team combined multi-material direct-ink writing with precision laser processing to achieve low-cost, large-scale production of flexible electronics and soft robotic sensor arrays. Micron-level precision printing enabled highly integrated flexible devices incorporating multimodal sensors for pressure, temperature, electrical conductivity, and more (Figure 1A). In this system, the pneumatic soft robot is driven by human surface electromyography (sEMG) signals. It executes precise actions, uses multimodal perception to distinguish object materials, and sends real-time feedback to the user's e-skin.

To address individual physiological variations, the team designed an adaptive machine learning algorithm that combines a linear mapping net (LMN) with a knowledge transfer strategy (Figure 1B). New users need only a few calibration actions to obtain a personalized model. Across 14 complex gestures, the average recognition accuracy exceeds 98%, with latency as low as 0.1 seconds. The team also fused thermal conductivity and electrical conductivity sensing, using a convolutional neural network (CNN) to analyze the data. Equipped with the sensor array, the soft robot identifies up to 20 everyday items (such as metals and plastics) with over 98% accuracy, significantly enhancing robotic environmental perception and interaction capabilities.
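The idea of adapting to a new user from a few calibration actions can be illustrated with a small sketch. This is not the paper's LMN or its training procedure, which the article does not describe in detail. It assumes a frozen reference classifier (here a simple nearest-centroid rule), synthetic 8-channel features, and a ridge-regularized linear map fitted per user. All names (`fit_linear_map`, `predict`, `run_demo`) and all numeric settings are hypothetical.

```python
import numpy as np


def fit_linear_map(x_new, x_ref, ridge=1e-3):
    """Fit W, b so that x_new @ W + b approximates x_ref (ridge least squares)."""
    n, d = x_new.shape
    a = np.hstack([x_new, np.ones((n, 1))])  # extra column of ones learns the bias
    reg = ridge * np.eye(d + 1)
    reg[-1, -1] = 0.0  # leave the bias term unpenalized
    wb = np.linalg.solve(a.T @ a + reg, a.T @ x_ref)
    return wb[:-1], wb[-1]


def predict(x, centroids):
    """Frozen reference classifier: index of the nearest gesture centroid."""
    dist = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)


def run_demo(seed=0, n_gestures=14, n_channels=8, shots=3, n_test=20):
    """Compare accuracy on a synthetic new user before and after adaptation."""
    rng = np.random.default_rng(seed)
    # Assumed reference feature space: one centroid per gesture.
    centroids = 3.0 * rng.normal(size=(n_gestures, n_channels))
    # Assumed user-specific distortion: channel mixing plus an offset.
    mix = np.eye(n_channels) + 0.2 * rng.normal(size=(n_channels, n_channels))
    shift = 2.0 * rng.normal(size=n_channels)

    def record(labels):
        clean = centroids[labels] + 0.3 * rng.normal(size=(len(labels), n_channels))
        return clean @ mix + shift

    calib_y = np.repeat(np.arange(n_gestures), shots)   # a few shots per gesture
    test_y = np.repeat(np.arange(n_gestures), n_test)
    calib_x, test_x = record(calib_y), record(test_y)

    # Only the linear map is fitted; the reference classifier stays frozen.
    w, b = fit_linear_map(calib_x, centroids[calib_y])
    baseline = float((predict(test_x, centroids) == test_y).mean())
    adapted = float((predict(test_x @ w + b, centroids) == test_y).mean())
    return baseline, adapted


if __name__ == "__main__":
    base_acc, adapted_acc = run_demo()
    print(f"accuracy without adaptation: {base_acc:.2f}")
    print(f"accuracy with linear map:    {adapted_acc:.2f}")
```

In this toy setting, only the small per-user map is learned from calibration data, which is why a handful of repetitions per gesture can suffice. The real system works on measured sEMG signals and a learned network, so its behavior may differ from this synthetic example.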

In practical applications, the team tested the system on a volunteer with a forearm amputation, verifying the effectiveness and robustness of the closed-loop interface (Figure 1C). Despite signal attenuation and delay in the residual limb muscles, the system still achieved 94.36% accuracy in gesture recognition and drove the soft robot to complete grasping tasks, highlighting its prospects for next-generation intelligent prosthetics.

Figure 1A: Schematic of the bidirectional flexible sensing interface for information acquisition and interaction.

Figure 1B: Adaptive machine learning algorithm enabling few-shot knowledge transfer for personalized model adaptation.

Figure 1C: Application of the intelligent prosthetic in a forearm amputee.

The paper’s co-first authors include Wang Guohui (2024 PhD student), Tang Yao ’25, and Luo Xinran (2023 master’s student). Prof. Yu You is one of the corresponding authors, with ShanghaiTech University as the primary affiliation.