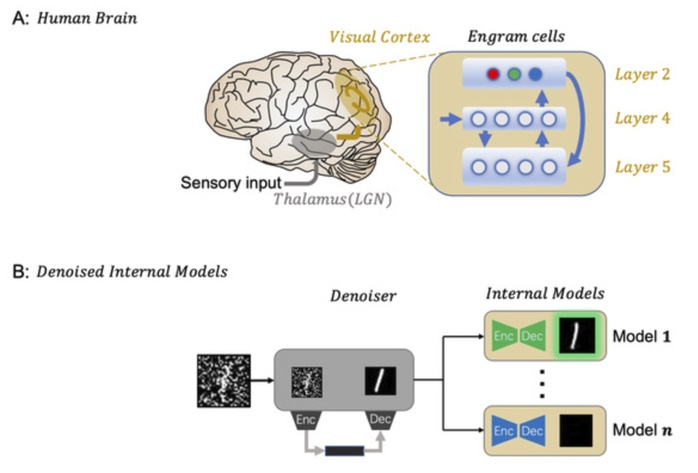

Great advances in deep learning have produced a large number of sophisticated models that approach human-level performance in tasks such as image classification, speech recognition, and natural language processing. Despite their success, deep neural network (DNN) models remain vulnerable to adversarial attacks: adding perturbations that are imperceptible to humans can completely alter the predictions of the underlying network. The human brain, viewed as an information processing system, is by contrast remarkably robust. In the human brain, two areas are involved in the visual information processing pipeline: the thalamus and the primary visual cortex. Visual signals from the retina travel to the lateral geniculate nucleus (LGN) of the thalamus before reaching the primary visual cortex. The LGN is specialized in handling visual information and helps process different kinds of stimuli. An important finding is that Layer 2/3 of the primary visual cortex contains so-called engram cells, which are activated only by specific stimuli.

Inspired by this visual pipeline, a joint research team led by SLST Associate Professor Guan Ji-Song and Professor Zhou Yi from the University of Science and Technology of China (USTC) proposed a denoised internal model (DIM) to tackle character recognition tasks under complex noise. DIM consists of two stages: a global denoising network (denoiser) and a set of generative autoencoders. On September 29, their results were published in the journal Machine Intelligence Research in a paper entitled “Denoised Internal Models: A Brain-inspired Autoencoder Against Adversarial Attacks”.

In the first stage of DIM, the researchers use a global denoiser that extracts the “true” signals from the input images, analogous to the function of the LGN in the thalamus. The basic idea is that adversarial perturbations are generally semantically meaningless and can effectively be treated as noise in the raw images. The second stage of DIM consists of a set of internal generative models that operate in a dichotomous, accept-or-reject manner. Each internal model accepts only images from one distinct category and rejects images from all other categories. If it accepts the input, the internal model outputs a reconstructed image; if it rejects the input, it returns a black image. In this way, DIM mirrors the behavior of engram cells in the primary visual cortex.
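The two-stage flow described above can be illustrated with a small, self-contained Python sketch. It is not the authors' implementation: the thresholding `denoise` function, the prototype-based `InternalModel` class, the `accept_threshold` parameter, and the rule of predicting the class whose internal model produces the highest-energy output are all hypothetical stand-ins, chosen only to show the accept-or-reject behavior. The published DIM uses a trained denoising network and generative autoencoders instead.

```python
import math


def denoise(image, floor=0.2):
    """Stage 1 stand-in (assumption): zero out low-amplitude pixels as noise."""
    return [p if abs(p) >= floor else 0.0 for p in image]


class InternalModel:
    """Stage 2 stand-in (assumption): accepts inputs that resemble one class prototype."""

    def __init__(self, prototype, accept_threshold=0.8):
        norm = math.sqrt(sum(p * p for p in prototype))
        self.unit = [p / norm for p in prototype]  # unit-length class prototype
        self.accept_threshold = accept_threshold

    def __call__(self, image):
        black = [0.0] * len(image)
        norm = math.sqrt(sum(p * p for p in image))
        if norm == 0.0:
            return black
        coeff = sum(u * p for u, p in zip(self.unit, image))
        # Reject inputs whose cosine similarity to the prototype is too low.
        if coeff / norm < self.accept_threshold:
            return black
        # Accept: reconstruct the input as its projection onto the prototype.
        return [coeff * u for u in self.unit]


def dim_predict(image, internal_models):
    """Denoise, query every internal model, and pick the most energetic output.

    Returns the index of the winning model, or None if every model rejects.
    The energy-based decision rule is an assumption made for this sketch.
    """
    clean = denoise(image)
    outputs = [model(clean) for model in internal_models]
    energies = [sum(p * p for p in out) for out in outputs]
    if max(energies) == 0.0:
        return None
    return energies.index(max(energies))


# Two toy "categories" on 4-pixel images.
models = [
    InternalModel([1.0, 1.0, 0.0, 0.0]),
    InternalModel([0.0, 0.0, 1.0, 1.0]),
]
noisy_input = [0.9, 1.0, 0.1, -0.1]  # class-0 pattern plus a small perturbation
print(dim_predict(noisy_input, models))
```

In this toy setting the small perturbation is removed in the first stage, so only the internal model for the matching category produces a non-black reconstruction.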

To evaluate DIM's robustness comprehensively, the team conducted extensive experiments on the MNIST (Modified National Institute of Standards and Technology) handwritten digits dataset, testing DIM and its binarized variant, biDIM, against a broad spectrum of adversarial attacks and comparing their performance with state-of-the-art (SOTA) models on MNIST. The results showed that DIM and biDIM outperform those models in overall robustness and have the most stable performance.

The present work is an initial attempt to integrate the brain's working mechanism with model design in deep learning. In future work, Guan Ji-Song and his team will explore more sophisticated realizations of the internal models and extend the model to real-world datasets. Notably, several ShanghaiTech undergraduates were involved in this study and contributed to the idea and experiments. Liu Kaiyuan, a visiting student from Tsinghua University at SLST, and Li Xingyu from the Shanghai Center for Brain Science and Brain-Inspired Technology are the co-first authors. Guan Ji-Song and Zhou Yi are the co-corresponding authors.