Syntactic parsing, including dependency parsing and constituency parsing, studies the automatic discovery of the internal structure of sentences (e.g., subject-predicate-object structures, various types of phrase structures, and coordinating and subordinating relations between words). It is a fundamental task in natural language processing (NLP) and provides useful information for many downstream NLP tasks.

Besides providing syntactic information for downstream tasks, the techniques and approaches of syntactic parsing can also be applied directly to those tasks. In recent years, many works have cast downstream structured prediction tasks as syntactic parsing. One important example is named entity recognition (NER), an information extraction task that aims to automatically identify entity mentions (e.g., the names of people, locations, and organizations) in a sentence. However, conventional sequence-labeling-based NER models cannot handle nested entities, which are very common in real applications (e.g., the organization name “ShanghaiTech University” contains the location name “Shanghai”). As a result, nested NER has recently become an active topic in NER research.

Associate Professor Tu Kewei's group in the Vision and Data Intelligence Center of SIST focuses on research in natural language processing and computational linguistics. The group recently published three papers in the main conference of the 60th Annual Meeting of the Association for Computational Linguistics (ACL 2022), the top conference in the field, as well as one paper in its companion publication, Findings of ACL. ACL is also one of the most important conferences in artificial intelligence.

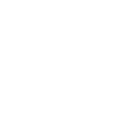

In the main conference paper “Headed-Span-Based Projective Dependency Parsing”, Tu’s group proposed a new approach to projective dependency parsing based on headed spans (Fig. 1) and designed a cubic-time dynamic programming algorithm for parsing. Because a headed span covers all the words in the subtree rooted at its head, modeling headed spans can leverage more subtree information than the arc-based modeling used in traditional methods, and thus achieves better performance. Second-year master's student Yang Songlin is the first author of the paper, and Prof. Tu is the corresponding author.

Figure 1. A projective dependency parse tree can be viewed as a set of headed spans. Each rectangle represents a headed span.
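To make the “set of headed spans” view concrete, here is a minimal sketch, assuming a tree given as a list of head indices (1-indexed words, 0 for the artificial root). The function name `headed_spans` and the example sentence are hypothetical illustrations; the code only converts a tree into its headed spans and does not reproduce the paper's span scoring or its cubic-time parsing algorithm.

```python
from typing import Dict, List, Tuple


def headed_spans(heads: List[int]) -> Dict[int, Tuple[int, int]]:
    """Return the headed span (left, right) of every word, 1-indexed and inclusive.

    heads[i - 1] is the head of word i, and 0 marks the artificial root.
    Assumes `heads` encodes a well-formed tree (no cycles). Raises ValueError
    if some subtree is not contiguous, i.e. the tree is non-projective and
    cannot be written as a set of headed spans.
    """
    children: Dict[int, List[int]] = {i: [] for i in range(len(heads) + 1)}
    for dep, head in enumerate(heads, start=1):
        children[head].append(dep)

    spans: Dict[int, Tuple[int, int]] = {}

    def visit(word: int) -> Tuple[int, int, int]:
        # A word's span starts as the word itself and grows to cover its children's spans.
        left, right, size = word, word, 1
        for child in children[word]:
            c_left, c_right, c_size = visit(child)
            left, right = min(left, c_left), max(right, c_right)
            size += c_size
        # In a projective tree, a subtree fills its span with no gaps.
        if right - left + 1 != size:
            raise ValueError(f"subtree of word {word} is not contiguous")
        spans[word] = (left, right)
        return left, right, size

    for root in children[0]:
        visit(root)
    return spans


# "She reads old books": "reads" is the root, "She" and "books" depend on
# "reads", and "old" depends on "books".
print(headed_spans([2, 0, 4, 2]))  # {1: (1, 1), 3: (3, 3), 4: (3, 4), 2: (1, 4)}
```

Each entry pairs a head word with the span it dominates, so the whole tree is recovered from the set of headed spans, matching the rectangles in Figure 1.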

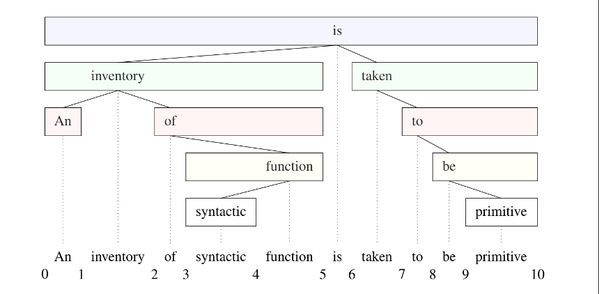

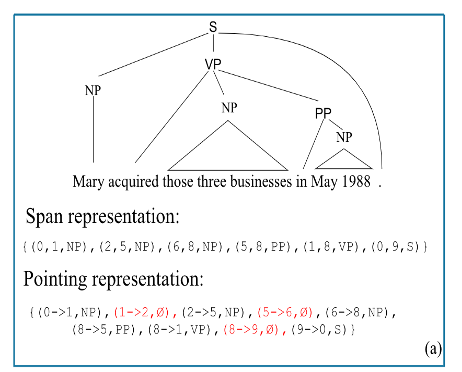

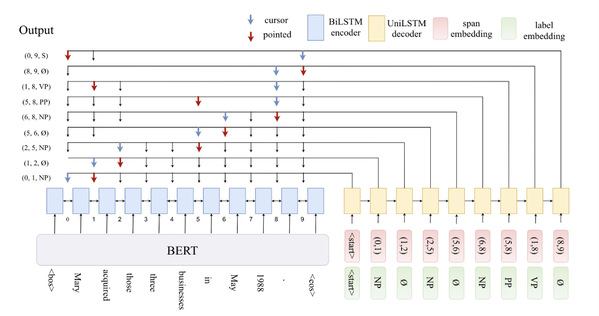

In the main conference paper “Bottom-Up Constituency Parsing and Nested Named Entity Recognition with Pointer Networks”, Tu’s group proposed a unified method for both constituency parsing and nested NER. Specifically, they designed a unified pointing representation (Fig. 2) that encodes both constituency parse trees and nested named entities, and proposed a pointer-network-based decoding method (Fig. 3) that maintains structural consistency. Yang Songlin, a master's student of the class of 2020, is the first author of the paper, and Prof. Tu is the corresponding author.

Figure 2. Pointing representations for (a) a constituency parse tree and (b) nested named entities

Figure 3. Decoding with pointer networks

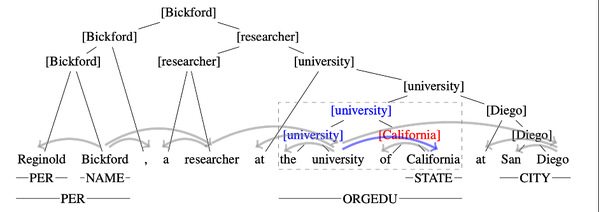

In the main conference paper “Nested Named Entity Recognition as Latent Lexicalized Constituency Parsing”, Tu’s group cast nested NER as lexicalized constituency parsing and pointed out that headwords (such as “University” in “ShanghaiTech University”) can be informative for NER. To model headwords, they took advantage of the lexicalized constituency tree structure (Fig. 4), in which each constituent span is annotated with a headword. They viewed nested named entities as partially observed lexicalized trees and leveraged the classic Eisner-Satta algorithm for efficient learning and decoding. Second-year master's student Lou Chao is the first author of the paper, and Prof. Tu is the corresponding author.

Figure 4. An example lexicalized constituency tree
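The defining property of a lexicalized tree is that every constituent carries a headword inherited from one of its children. The sketch below checks that property on a toy tree. The `Node` class, the labels, and the tree shape are hypothetical illustrations; the code does not implement the Eisner-Satta algorithm or the latent-headword learning described in the paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    label: str   # hypothetical constituent label
    start: int   # first token index (inclusive)
    end: int     # last token index (exclusive)
    head: int    # token index of the headword
    children: List["Node"] = field(default_factory=list)


def is_valid_lexicalized(node: Node) -> bool:
    """Check head placement, span tiling, and head percolation recursively."""
    if not node.start <= node.head < node.end:
        return False
    if not node.children:
        # A leaf covers exactly one token, which is its own headword.
        return node.end - node.start == 1
    # Children must tile the parent span from left to right without gaps.
    pos = node.start
    for child in node.children:
        if child.start != pos or not is_valid_lexicalized(child):
            return False
        pos = child.end
    if pos != node.end:
        return False
    # Head percolation: the parent's headword is the headword of one child.
    return any(child.head == node.head for child in node.children)


tokens = ["students", "at", "ShanghaiTech", "University"]
org = Node("ORG", 2, 4, head=3, children=[Node("w", 2, 3, 2), Node("w", 3, 4, 3)])
pp = Node("PP", 1, 4, head=1, children=[Node("w", 1, 2, 1), org])
root = Node("NP", 0, 4, head=0, children=[Node("w", 0, 1, 0), pp])

print(tokens[org.head], is_valid_lexicalized(root))  # University True
# A PP headed by "ShanghaiTech" is invalid: no child of the PP has that headword.
bad_pp = Node("PP", 1, 4, head=2, children=[Node("w", 1, 2, 1), org])
print(is_valid_lexicalized(bad_pp))  # False
```

Treating nested entities as a partially observed tree means spans like `ORG` above are given, while the remaining constituents and headwords are latent.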

All four papers were supported by the National Natural Science Foundation of China and the Shanghai Talent Development Fund.