Prof. Xu Lan’s research group at the Visual & Data Intelligence (VDI) Center focuses on top-tier research at the intersection of computer vision, computer graphics and computational photography, with applications in human digital twin technology, light field reconstruction, artificial reality and artificial intelligence for digital entertainment. The group recently published two papers at the IEEE Conference on Computer Vision and Pattern Recognition 2021 (CVPR 2021), presenting its latest achievements in computer vision. Brief introductions follow.

1. NeuralHumanFVV: Real-time neural volumetric human performance rendering using RGB cameras

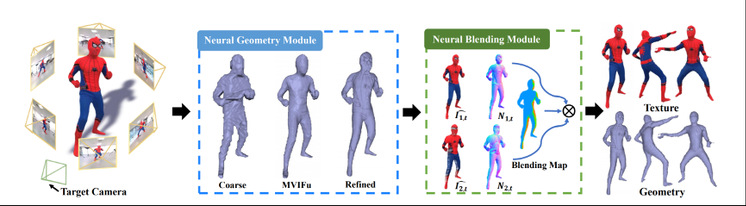

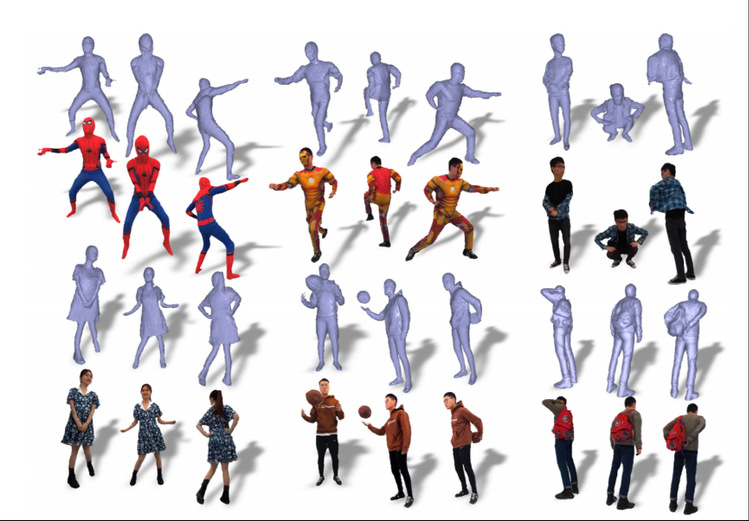

4D reconstruction and rendering of human activities are critical for immersive VR/AR experiences. However, recent methods that rely on sparse multi-view RGB cameras still fail to reproduce geometry and texture with the level of detail present in the input images. Xu’s lab proposed NeuralHumanFVV, a real-time neural human performance capture and rendering system that generates both high-quality geometry and photo-realistic texture of human activities from arbitrary novel viewpoints. The system combines a neural geometry generation scheme, which uses a hierarchical sampling strategy for real-time implicit geometry inference, with a novel neural blending scheme that produces high-resolution (e.g., 1K) photo-realistic texture in novel views. Furthermore, the researchers adopted neural normal blending to enhance geometric detail and formulated neural geometry and texture rendering as a multi-task learning framework. Extensive experiments demonstrate the system's effectiveness in achieving high-quality geometry and photo-realistic free-viewpoint reconstruction of challenging human performances.
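To make the general idea of hierarchical sampling more concrete, here is a minimal Python sketch of generic coarse-to-fine sampling of an implicit occupancy function. It is not NeuralHumanFVV's implementation: the analytic sphere `sphere_occupancy` is a hypothetical stand-in for a learned implicit geometry network, and the function names, grid resolutions and bounds are illustrative assumptions. The sketch evaluates occupancy at the corners of a coarse grid, keeps only the cells whose corners disagree (cells the surface passes through), and subdivides just those, so far fewer points are queried than with a dense grid at the finest resolution.

```python
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float, float]
Lattice = Tuple[int, int, int]


def sphere_occupancy(p: Point, radius: float = 0.5) -> float:
    """Hypothetical stand-in for a learned implicit function: 1.0 inside, 0.0 outside."""
    x, y, z = p
    return 1.0 if x * x + y * y + z * z <= radius * radius else 0.0


def hierarchical_sample(
    occ: Callable[[Point], float],
    coarse_res: int = 8,
    levels: int = 3,
    lo: float = -1.0,
    hi: float = 1.0,
) -> Tuple[List[Lattice], int, int]:
    """Coarse-to-fine occupancy sampling inside the cube [lo, hi]^3.

    Returns (boundary cells at the finest level, occupancy queries made,
    queries a dense grid at the finest resolution would need).
    """
    n = coarse_res * 2 ** (levels - 1)  # finest lattice resolution per axis
    step = (hi - lo) / n                # finest cell edge length in world units
    cache: Dict[Lattice, float] = {}    # lattice corner -> occupancy value

    def query(c: Lattice) -> float:
        # Evaluate each lattice corner at most once.
        if c not in cache:
            cache[c] = occ((lo + c[0] * step, lo + c[1] * step, lo + c[2] * step))
        return cache[c]

    size = 2 ** (levels - 1)  # coarse cell edge length in lattice units
    cells = [(i * size, j * size, k * size)
             for i in range(coarse_res) for j in range(coarse_res) for k in range(coarse_res)]
    boundary: List[Lattice] = []
    for level in range(levels):
        boundary = []
        for (i, j, k) in cells:
            corners = {query((i + di, j + dj, k + dk))
                       for di in (0, size) for dj in (0, size) for dk in (0, size)}
            # Corners that disagree mean the surface crosses this cell.
            # Caveat: geometry hidden entirely inside a cell is missed.
            if len(corners) > 1:
                boundary.append((i, j, k))
        if level == levels - 1:
            break
        half = size // 2
        # Refine only the boundary cells, splitting each into 8 children.
        cells = [(i + di, j + dj, k + dk)
                 for (i, j, k) in boundary
                 for di in (0, half) for dj in (0, half) for dk in (0, half)]
        size = half
    return boundary, len(cache), (n + 1) ** 3


if __name__ == "__main__":
    cells, queries, dense = hierarchical_sample(sphere_occupancy)
    print(f"{len(cells)} boundary cells, {queries} queries vs {dense} for a dense grid")
```

With the default settings the finest grid has 32 cells per axis, so a dense evaluation would need 33³ = 35,937 queries; the coarse-to-fine pass only spends queries near the surface. The simple corner test means the coarse resolution has to be fine enough for the geometry of interest, since a thin part that never changes a corner value is skipped.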

Third-year master's student Suo Xin is the first author, and Professor Xu Lan is the corresponding author.

Figure 1. The pipeline of NeuralHumanFVV, consisting of a neural geometry module and a neural blending module

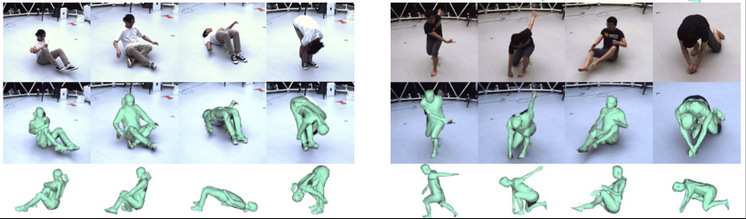

Figure 2. Reconstruction results of the NeuralHumanFVV system

2. Complex human motion capture using a monocular camera and multi-modal references

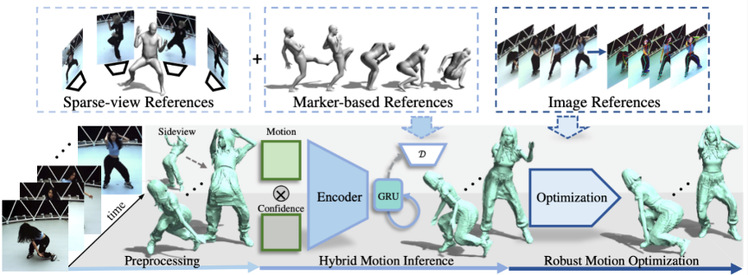

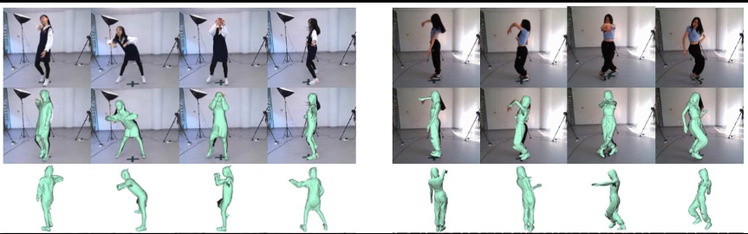

Capturing human motion is critical for numerous computer vision applications, but current methods struggle with complex motion patterns and severe self-occlusion in the monocular setting. To tackle this problem, Xu’s group proposed ChallenCap, a template-based approach that captures challenging 3D human motions with a single RGB camera, using a novel learning-and-optimization framework aided by multi-modal references. The results were published in the paper “ChallenCap: Monocular 3D Capture of Challenging Human Performances using Multi-Modal References”. The approach first uses a hybrid motion inference stage built around a generation network. This stage uses a temporal encoder-decoder to extract motion details from paired sparse-view references, and a motion discriminator to extract the characteristics of challenging motions from unpaired marker-based references. A robust motion optimization stage then improves tracking accuracy by jointly using the motion details learned from the supervised multi-modal references and reliable motion hints from the input images. In addition, the researchers built a new dataset containing many challenging motions, and extensive experiments on this dataset demonstrate the effectiveness and robustness of the approach in capturing challenging human motions.
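The balance that an optimization stage strikes between learned motion and image evidence can be illustrated with a deliberately simplified Python sketch. This is a toy, not ChallenCap's actual formulation: each frame's pose is reduced to a single hypothetical joint angle, `net` plays the role of the network's prediction, `img` holds per-frame image hints (or `None` where none is available), `conf` weights how reliable each hint is, and the weights and learning rate are arbitrary assumptions. Plain gradient descent minimizes a quadratic energy with a term pulling toward the network prediction, a confidence-weighted term pulling toward the image hints, and a temporal smoothness term between consecutive frames.

```python
from typing import List, Optional


def refine_motion(
    net: List[float],
    img: List[Optional[float]],
    conf: List[float],
    w_net: float = 1.0,
    w_img: float = 1.0,
    w_smooth: float = 1.0,
    iters: int = 500,
    lr: float = 0.05,
) -> List[float]:
    """Toy per-frame refinement of a single (hypothetical) joint angle.

    Minimizes, by plain gradient descent,
        sum_t w_net * (theta_t - net_t)^2
      + sum_t w_img * conf_t * (theta_t - img_t)^2   (frames with an image hint)
      + sum_t w_smooth * (theta_t - theta_{t-1})^2
    The learning rate must stay small relative to the weights to converge.
    """
    if not (len(net) == len(img) == len(conf)):
        raise ValueError("net, img and conf must have one entry per frame")
    theta = list(net)  # start from the network prediction
    for _ in range(iters):
        grad = [0.0] * len(theta)
        for t, th in enumerate(theta):
            grad[t] += 2.0 * w_net * (th - net[t])  # stay close to the learned motion
            hint = img[t]
            if hint is not None:
                grad[t] += 2.0 * w_img * conf[t] * (th - hint)  # follow reliable image hints
            if t > 0:
                d = th - theta[t - 1]  # temporal smoothness between consecutive frames
                grad[t] += 2.0 * w_smooth * d
                grad[t - 1] -= 2.0 * w_smooth * d
        theta = [th - lr * g for th, g in zip(theta, grad)]
    return theta


if __name__ == "__main__":
    print(refine_motion([0.0, 0.0, 0.0], [None, 1.0, None], [0.0, 1.0, 0.0]))
```

For example, with the network predicting 0 for three frames and a fully trusted image hint of 1 only on the middle frame, the result converges to approximately `[1/6, 1/3, 1/6]`: the middle frame moves partway toward its image hint, and the smoothness term pulls its neighbors along.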

Second-year master's student He Yannan is the first author, and Professor Xu Lan is the corresponding author.

Figure 3. The pipeline of ChallenCap with multi-modal references

Figure 4. Human motion tracking results