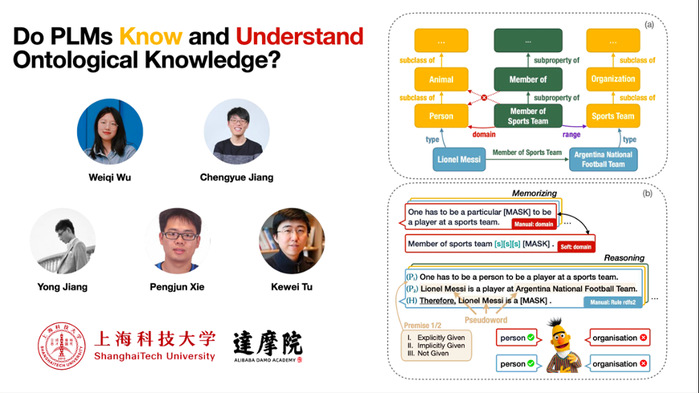

At the 61st Annual Meeting of the Association for Computational Linguistics (ACL), the paper “Do PLMs Know and Understand Ontological Knowledge?”, submitted by Associate Professor Tu Kewei’s group, received an Outstanding Paper Award. SIST graduate Wu Weiqi ’23 is the first author, doctoral student Jiang Chengyue ’19 is the second author, and Prof. Tu is the corresponding author. Alibaba Damo Academy is the collaborating institution.

Prof. Tu has long been engaged in research on natural language processing, machine learning, and artificial intelligence, and has published 100 articles at major conferences and in journals, including ACL, EMNLP, NAACL, and AAAI. He has also served at several NLP and AI conferences and was an action editor of ACL Rolling Review.

In this paper, the group explored how well pretrained language models (PLMs) know and understand ontological knowledge, advancing the systematic analysis of PLMs. They probed the ability of three PLMs (BERT, RoBERTa, and ChatGPT) to memorize ontological knowledge, including types of entities, hierarchical relationships among classes and properties, and domain and range constraints of properties. To determine whether the models also understand this knowledge, the researchers studied whether the models can reliably perform logical reasoning with given knowledge according to ontological entailment rules.
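To make the kinds of knowledge and rules named above concrete, the following is a minimal sketch of ontological entailment over a toy set of triples. It is not the paper's code or probing setup: the rules are RDFS-style rules chosen here as an assumption, and every entity, class, property, and function name (`entail`, `Marie`, `hasMother`, and so on) is hypothetical. In a probing study of this kind, one would presumably check whether a model, given the premise facts, predicts the conclusions that such rules derive.

```python
# Toy illustration of ontological entailment with RDFS-style rules.
# All names below are hypothetical and exist only for this example.
SUBCLASS = "subClassOf"
SUBPROP = "subPropertyOf"
DOMAIN = "domain"
RANGE = "range"
TYPE = "type"

TRIPLES = [
    ("Scientist", SUBCLASS, "Person"),     # class hierarchy
    ("Person", SUBCLASS, "Agent"),
    ("hasMother", SUBPROP, "hasParent"),   # property hierarchy
    ("hasParent", DOMAIN, "Person"),       # domain constraint
    ("hasParent", RANGE, "Person"),        # range constraint
    ("Marie", TYPE, "Scientist"),          # entity type
    ("Irene", "hasMother", "Marie"),       # plain fact
]


def entail(triples):
    """Apply the rules repeatedly until no new triple can be derived."""
    facts = set(triples)
    while True:
        new = set()
        for (s, p, o) in facts:
            for (s2, p2, o2) in facts:
                # Subclass transitivity: A sub B, B sub C => A sub C
                if p == SUBCLASS and p2 == SUBCLASS and o == s2:
                    new.add((s, SUBCLASS, o2))
                # Subproperty transitivity: p sub q, q sub r => p sub r
                if p == SUBPROP and p2 == SUBPROP and o == s2:
                    new.add((s, SUBPROP, o2))
                # Type inheritance: x type A, A sub B => x type B
                if p == TYPE and p2 == SUBCLASS and o == s2:
                    new.add((s, TYPE, o2))
                # Property inheritance: x p y, p sub q => x q y
                if p2 == SUBPROP and p == s2:
                    new.add((s, o2, o))
                # Domain: x p y, p domain C => x type C
                if p2 == DOMAIN and p == s2:
                    new.add((s, TYPE, o2))
                # Range: x p y, p range C => y type C
                if p2 == RANGE and p == s2:
                    new.add((o, TYPE, o2))
        if new <= facts:
            return facts
        facts |= new


closure = entail(TRIPLES)
derived = sorted(closure - set(TRIPLES))
for triple in derived:
    print(triple)
```

Memorization, in this picture, corresponds to knowing the triples in `TRIPLES`, while understanding corresponds to arriving at the derived triples, such as `("Irene", "hasParent", "Marie")`, from the premises.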

Their experiments showed that encoder-only models such as BERT and RoBERTa have some ability to encode ontological knowledge and to reason with the memorized knowledge, but remain limited in both memorizing and fully understanding it. In comparison, the decoder-only model ChatGPT showed a noteworthy improvement in both aspects, although it is still far from perfect. These results point to potential new research directions for further improving PLMs.

ACL is regarded as the most influential international academic conference in the field of computational linguistics and natural language processing. This year, the conference received nearly 5,000 submissions, with an acceptance rate of 20.7%. Including the award-winning paper, Prof. Tu’s team published six papers at the main conference and two papers in the Findings of ACL 2023, covering topics such as syntactic parsing, information extraction, language models, and basic model architectures. In addition to the papers published by Prof. Tu’s group, the team also took part in a study published by Alibaba Damo Academy, entitled “DAMO-NLP at SemEval-2023 Task 2: A Unified Retrieval-Augmented System for Multilingual Named Entity Recognition”. That paper won the Best System Paper Award at SemEval (International Workshop on Semantic Evaluation), one of the workshops of ACL 2023.

Author Biographies

Wu Weiqi, the first author of the paper, received her bachelor’s degree in Computer Science in 2023. Throughout her undergraduate studies, she carried out research in artificial intelligence and natural language processing under the guidance of Prof. Tu Kewei. Her main research interest is large language models, and she has published multiple papers at top conferences in natural language processing.

Jiang Chengyue, the second author of the paper, is currently a Ph.D. student in Prof. Tu’s group. He earned his bachelor’s degree at ShanghaiTech in 2019. His research interests include the combination of symbolic knowledge and neural network models, information extraction, and large-scale PLMs. He has published several papers at top international conferences in natural language processing, including ACL, EMNLP, and EACL.