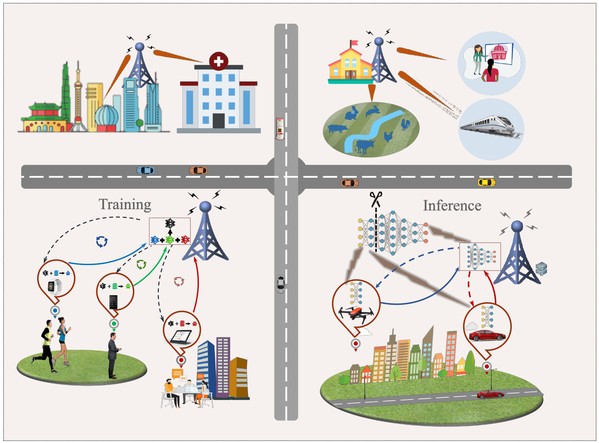

Prof. Shi Yuanming’s research group and collaborators from HKUST and HKPolyU have surveyed the key techniques for improving the communication efficiency of artificial intelligence (AI) training and inference at the network edge, known as edge AI. Edge AI is envisioned to drive the paradigm shift of future 6G networks from “connected things” to “connected intelligence”. The results were recently published in IEEE Communications Surveys & Tutorials under the title “Communication-Efficient Edge AI: Algorithms and Systems” by Shi et al.

Communication-Efficient Edge AI Algorithm Design

The distributed structure and limited resources of edge AI make it fundamentally different from model training in a cloud data center. Because communication bandwidth is limited, the overhead of frequent information exchange among distributed devices during model training becomes one of the key bottlenecks. The paper thoroughly investigates optimization algorithms for edge training and the approaches for improving their communication efficiency from two perspectives: reducing the number of communication rounds and reducing the bandwidth required in each round. The optimization algorithms are grouped into four classes: zeroth-order methods, first-order methods, second-order methods, and federated optimization methods. Real-world applications often impose various constraints, such as data privacy requirements and a limit on the number of information-exchange rounds. The paper provides basic algorithmic frameworks upon which a customized edge AI algorithm can be designed for a specific application. Its extensive reference list also lays the groundwork for future research.
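
To make the two levers concrete, here is a minimal Python sketch, not taken from the paper: a federated-averaging-style loop on a toy least-squares problem in which each device runs several local gradient steps before communicating (fewer rounds) and uploads only the k largest-magnitude entries of its model update (less bandwidth per round). The function names, the least-squares model, the learning rate and the choice of top-k sparsification are illustrative assumptions; the survey covers many other schemes.

```python
import random

# Illustrative sketch only: all names, the least-squares model and the
# hyperparameters are hypothetical, not taken from the survey.

def local_update(w, data, lr, local_steps):
    """Run several full-batch gradient steps on this device's squared loss
    and return the resulting model change (delta)."""
    w_local = list(w)
    n = len(data)
    for _ in range(local_steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w_local, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w_local = [wi - lr * gj for wi, gj in zip(w_local, grad)]
    return [a - b for a, b in zip(w_local, w)]


def top_k(vec, k):
    """Keep only the k largest-magnitude entries as a sparse {index: value} map."""
    idx = sorted(range(len(vec)), key=lambda j: abs(vec[j]), reverse=True)[:k]
    return {j: vec[j] for j in idx}


def federated_training(devices, dim, rounds, local_steps, k, lr=0.1):
    """Server loop: broadcast w, collect sparsified deltas, average them."""
    w = [0.0] * dim
    floats_sent = 0  # uplink payload: one index plus one value per kept entry
    for _ in range(rounds):
        agg = [0.0] * dim
        for data in devices:
            # More local_steps -> fewer rounds needed; smaller k -> smaller uploads.
            sparse = top_k(local_update(w, data, lr, local_steps), k)
            floats_sent += 2 * len(sparse)
            for j, v in sparse.items():
                agg[j] += v / len(devices)
        w = [wi + ai for wi, ai in zip(w, agg)]
    return w, floats_sent


def make_devices(w_true, num_devices, points_per_device, seed=0):
    """Generate noiseless toy data y = <w_true, x>, split across devices."""
    rng = random.Random(seed)
    devices = []
    for _ in range(num_devices):
        data = []
        for _ in range(points_per_device):
            x = [rng.uniform(-1, 1) for _ in w_true]
            data.append((x, sum(a * b for a, b in zip(w_true, x))))
        devices.append(data)
    return devices


w_true = [1.0, -2.0, 0.5]
devices = make_devices(w_true, num_devices=4, points_per_device=20)
w_dense, sent_dense = federated_training(devices, 3, rounds=100, local_steps=10, k=3)
w_sparse, sent_sparse = federated_training(devices, 3, rounds=100, local_steps=10, k=1)
print(w_dense, sent_dense, sent_sparse)  # sent_dense = 2400, sent_sparse = 800
```

With k=3 every coordinate is uploaded and the model approaches w_true; with k=1 each upload is a third of the size. Dropping entries without compensation can slow convergence, which is why schemes such as error feedback (accumulating the dropped residual locally for later rounds) are commonly paired with sparsification.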

Communication-Efficient Edge AI Systems Design

The paper also surveys system-level approaches for improving communication efficiency, organized by edge AI system architecture. The main architectures are grouped into four categories: data-partition-based edge training systems, model-partition-based edge training systems, computation-offloading-based edge inference systems, and general edge computing systems. These architectures have been studied extensively in recent years, and a number of techniques for enhancing communication efficiency have been proposed, such as over-the-air computation, pliable index coding, coded computing, and cooperative transmission. The survey shows that the availability of source data and the structure of the AI task are the two main factors that determine the architecture of an edge AI system. For a given architecture, many efforts have been made to satisfy the constraints of various applications (e.g., storage, computation, communication, and privacy), and these efforts provide valuable references for both industry and academia.
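
Over-the-air computation is one of the techniques named above. The minimal Python sketch below is an illustration, not the paper's scheme, and rests on strong simplifying assumptions: perfect channel knowledge at every device, channel-inversion precoding with no transmit-power limit, and a single scalar per device. It shows the core idea that, because the wireless multiple-access channel adds simultaneously transmitted signals, the server can obtain the average of all devices' values in one time slot instead of K slots with orthogonal access. The function names and the Rayleigh fading model are hypothetical choices.

```python
import random

# Illustrative sketch of over-the-air computation (AirComp); the names, the
# Rayleigh fading model and channel-inversion precoding are assumptions.

def rayleigh_channel(rng):
    """Complex Gaussian channel gain with unit average power."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / 2 ** 0.5


def aircomp_average(values, noise_std, rng):
    """All K devices transmit in the same slot; the channel sums their signals."""
    channels = [rayleigh_channel(rng) for _ in values]
    # Channel inversion: device k sends v_k / h_k so that h_k * s_k = v_k.
    # Assumes perfect CSI and unlimited transmit power (deep fades would
    # demand very high power in practice).
    tx = [v / h for v, h in zip(values, channels)]
    noise = complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std))
    received = sum(h * s for h, s in zip(channels, tx)) + noise  # superposition
    return received.real / len(values), 1  # (estimate of the mean, slots used)


def orthogonal_average(values):
    """Baseline: each device uploads in its own slot (e.g. TDMA)."""
    return sum(values) / len(values), len(values)


rng = random.Random(1)
updates = [0.5, 1.5, -2.0, 3.0]
est_air, slots_air = aircomp_average(updates, noise_std=0.0, rng=rng)
est_orth, slots_orth = orthogonal_average(updates)
print(est_air, slots_air, est_orth, slots_orth)
```

With noise_std=0.0 the over-the-air estimate matches the orthogonal baseline (0.75) up to floating-point rounding while using one slot instead of four; a nonzero noise_std exposes the accuracy cost. Practical designs replace plain channel inversion with power control under transmit-power constraints.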

Prof. Shi Yuanming is the first author of this article, and ShanghaiTech University is the first affiliated institution. This work was supported by grants from the National Natural Science Foundation of China and ShanghaiTech University.

Read more at: https://ieeexplore.ieee.org/document/9134426/

Figure 1. Illustration of edge AI, including edge training and edge inference.

Figure 2. Illustration of different optimization methods for model training: (a) zeroth-order methods; (b) first-order methods; (c) second-order methods; (d) federated optimization methods.

Figure 3. Computation offloading based edge inference systems: (a) server-based edge inference; (b) device-edge joint inference.