Recently, work on personalized saliency detection by the research group of Professor Yu Jingyi and Assistant Professor Gao Shenghua of the School of Information Science and Technology was published online in IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), in an article titled ‘Personalized Saliency and Its Prediction.’

Nearly all existing visual saliency models have focused on predicting a universal saliency map across all observers. Yet psychology studies suggest that the visual attention of different observers can vary significantly under specific circumstances, especially when a scene is composed of multiple salient objects. To study such visual attention patterns across observers, the researchers first constructed a personalized saliency dataset and explored correlations between visual attention, personal preferences, and image contents. Specifically, they proposed to decompose a personalized saliency map (referred to as PSM) into a universal saliency map (referred to as USM) predictable by existing saliency detection models and a new discrepancy map across users that characterizes personalized saliency. They then presented two solutions for predicting such discrepancy maps: a multi-task convolutional neural network (CNN) framework and an extended CNN with Person-specific Information Encoded Filters (CNN-PIEF).

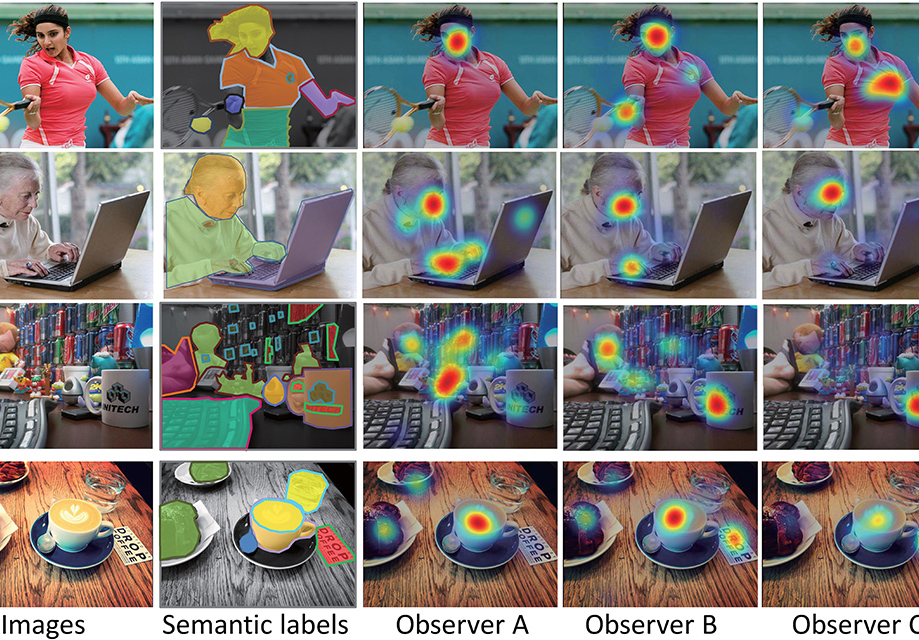

Almost all previous approaches focused on exploring a universal saliency model, i.e., predicting potential salient regions common to observers while ignoring their differences in gender, age, personal preferences, etc. Such universal solutions are beneficial in the sense that they are able to capture all “potential” saliency regions. Yet they are insufficient in recognizing heterogeneity across individuals. Examples in Fig. 1 illustrate that while multiple objects are deemed highly salient within the same image, e.g., human face (first row), text (last two rows, ‘DROP COFFEE’ in the last row) and objects of high color contrast (zip-top cans in the third row), different observers have very different fixation preferences when viewing the image. The researchers use the term universal saliency to describe salient regions that incur high fixations across all observers via a universal saliency map (referred to as USM); in contrast, they use the term personalized saliency to describe the heterogeneous ones via a personalized saliency map (referred to as PSM).

Personalized saliency detection can potentially benefit various applications. For example, in image retargeting, the text on the table in the fourth row in Fig. 1 should be preserved for observers B and C when resizing the image but can be eliminated for observer A. In VR content streaming, a user-correlative compression algorithm can be realized: data compression algorithms can be designed that preserve personalized salient regions but compress the rest further to minimize transmission overhead. Finally, in advertisement deployment, the location of the advertising window can be adapted according to the predicted personal preferences.

To predict PSM, the researchers drew on existing work on USM and decomposed PSM into the sum of USM and a discrepancy between PSM and USM. They proposed two solutions to predict this discrepancy: i) a multi-task CNN framework; and ii) a CNN-PIEF. The second solution rests on the observation that personalized saliency is closely related to each observer’s personal information (gender, race, major, etc.). Because the discrepancy is therefore related to both image content and observer identity, the researchers proposed to concatenate the USM and the RGB image and feed them to a CNN-PIEF to predict the discrepancy. Extensive experiments validated the effectiveness of their methods for personalized saliency prediction.
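The decomposition described above can be illustrated with a small numerical sketch. This is not the authors' code: the function names, the toy 2×3 maps, and the assumption that saliency values lie in [0, 1] are all hypothetical, and the "predicted" discrepancy here is simply the true difference rather than the output of a trained network.

```python
import numpy as np

def discrepancy_map(psm, usm):
    """Discrepancy = one observer's PSM minus the shared USM (may be negative)."""
    return psm - usm

def reconstruct_psm(usm, predicted_discrepancy):
    """PSM estimate = USM + discrepancy, clipped to [0, 1] (an assumed value range)."""
    return np.clip(usm + predicted_discrepancy, 0.0, 1.0)

# Toy maps (hypothetical values): rows and columns stand in for image pixels.
usm = np.array([[0.8, 0.6, 0.1],
                [0.2, 0.7, 0.0]])
psm_observer_a = np.array([[0.9, 0.1, 0.1],
                           [0.2, 0.9, 0.0]])

# In the article's setting a CNN would predict this map; here it is computed directly.
delta_a = discrepancy_map(psm_observer_a, usm)
recovered = reconstruct_psm(usm, delta_a)
```

A negative entry in `delta_a` marks a region that the universal map rates as salient but this particular observer tends to ignore; a positive entry marks the opposite.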

The first author is PhD candidate Xu Yanyu, and Gao Shenghua is the corresponding author. Wu Junru, Li Nianyi and Yu Jingyi are co-authors. The work was supported by the National Natural Science Foundation of China and ShanghaiTech start-up funding; Li Nianyi is also supported by US National Science Foundation grants.

Read more at: https://ieeexplore.ieee.org/document/8444709/

If an observer’s personalized interestingness (personalized saliency) for a scene is known, tailored algorithms can potentially be designed to cater to his or her needs.